Introduction

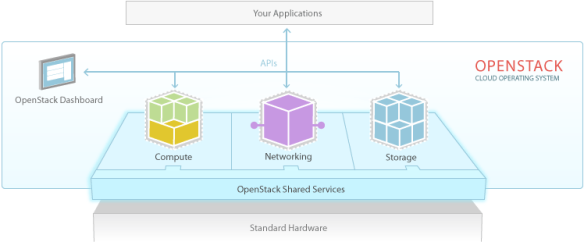

OpenStack is one of the most popular open source cloud computing projects providing an Infrastructure as a Service (IaaS) solution. Its core components are Compute (Nova), Networking (Neutron, formerly known as Quantum), Storage (object storage and block storage: Swift and Cinder, respectively), the OpenStack Dashboard (Horizon), the Identity Service (Keystone) and the Image Service (Glance).

Other officially incubated projects include Metering (Ceilometer) and Orchestration and Service Definition (Heat).

Savanna is a Hadoop-as-a-Service project for OpenStack, introduced by Mirantis. It is still at an early stage (v0.2 was released in summer 2013), and according to its roadmap, version 1.0 is targeted for official OpenStack incubation. In principle, Heat could also be used to provision Hadoop clusters, but Savanna is specifically tuned to provide Hadoop-specific API functionality, while Heat is meant for general-purpose orchestration.

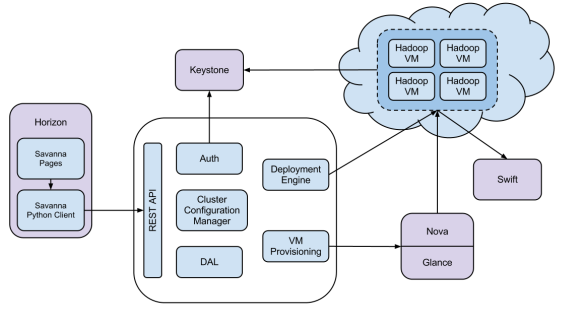

Savanna Architecture

Savanna is integrated with the core OpenStack components such as Keystone, Nova, Glance, Swift and Horizon. It has a REST API that supports the Hadoop cluster provisioning steps.

The Savanna API is implemented as a WSGI server that, by default, listens on port 8386. Savanna can also be integrated with Horizon, the OpenStack Dashboard, so that Hadoop clusters can be created from the management console. Savanna ships with a Vanilla plugin, which deploys a vanilla (unmodified Apache) Hadoop cluster from a pre-built image; the out-of-the-box Vanilla plugin supports Hadoop version 1.1.2.
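Every REST call in this post goes to the same WSGI endpoint: host, default port 8386, the API version prefix v1.0, the tenant ID, and then the resource path. A minimal Python sketch of that URL layout, assuming the defaults used in this post (savanna_base_url and resource_url are hypothetical helpers, not part of Savanna):

```python
def savanna_base_url(tenant_id: str, host: str = "localhost",
                     port: int = 8386, version: str = "v1.0") -> str:
    """Tenant-scoped base URL of the Savanna REST API (defaults assumed from this post)."""
    return f"http://{host}:{port}/{version}/{tenant_id}"


def resource_url(tenant_id: str, *parts: str, **base_kwargs) -> str:
    """Append resource path segments, e.g. ('images', image_id, 'tag')."""
    return "/".join([savanna_base_url(tenant_id, **base_kwargs), *parts])


if __name__ == "__main__":
    tenant = "2f46e214984f4990b9c39d9c6222f572"
    print(resource_url(tenant, "node-group-templates"))
```

The shell equivalent used later is simply SAVANNA_URL="http://localhost:8386/v1.0/$TENANT_ID" with the resource appended.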

Installing Savanna

The simplest way to try out Savanna is to use devstack in a virtual machine. I used an Ubuntu 12.04 virtual instance for my tests. In that environment, we need to execute the following commands to install devstack and the Savanna API:

```
$ sudo apt-get install git-core
$ git clone https://github.com/openstack-dev/devstack.git
$ cd devstack
$ vi localrc    # edit localrc
```

Contents of localrc:

```
ADMIN_PASSWORD=nova
MYSQL_PASSWORD=nova
RABBIT_PASSWORD=nova
SERVICE_PASSWORD=$ADMIN_PASSWORD
SERVICE_TOKEN=nova

# Enable Swift
ENABLED_SERVICES+=,swift
SWIFT_HASH=66a3d6b56c1f479c8b4e70ab5c2000f5
SWIFT_REPLICAS=1
SWIFT_DATA_DIR=$DEST/data

# Force checkout prerequisites
# FORCE_PREREQ=1

# Keystone is now configured by default to use PKI as the token format, which produces huge tokens.
# Set UUID as the Keystone token format, which is much shorter and easier to work with.
KEYSTONE_TOKEN_FORMAT=UUID

# Change FLOATING_RANGE to whatever IP range the VM is working in.
# In NAT mode it is the subnet VMware Fusion provides; in bridged mode it is your local network.
FLOATING_RANGE=192.168.55.224/27

# Enable auto assignment of floating IPs. By default Savanna expects this setting to be enabled.
EXTRA_OPTS=(auto_assign_floating_ip=True)

# Enable logging
SCREEN_LOGDIR=$DEST/logs/screen
```

Then run devstack and install the Savanna API into a virtualenv:

```
$ ./stack.sh    # this will take a while to execute
$ sudo apt-get install python-setuptools python-virtualenv python-dev
$ virtualenv savanna-venv
$ savanna-venv/bin/pip install savanna
$ mkdir savanna-venv/etc
$ cp savanna-venv/share/savanna/savanna.conf.sample savanna-venv/etc/savanna.conf
# To start the Savanna API:
$ savanna-venv/bin/python savanna-venv/bin/savanna-api --config-file savanna-venv/etc/savanna.conf
```
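Once savanna-api is running, a quick way to confirm that the WSGI server accepts connections on its default port is a plain TCP check. A minimal Python sketch, assuming the API runs locally on port 8386 (is_listening is a hypothetical helper; it only tests the socket, not the API itself):

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 8386,
                 timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("Savanna API port open:", is_listening())
```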

To install the Savanna UI integrated with Horizon, we need to run the following commands:

```
$ sudo pip install savanna-dashboard
$ cd /opt/stack/horizon/openstack_dashboard
$ vi settings.py
```

In settings.py, add 'savanna' to the dashboards and 'savannadashboard' to the installed apps:

```
HORIZON_CONFIG = {
    'dashboards': ('nova', 'syspanel', 'settings', 'savanna'),
    ...
}

INSTALLED_APPS = (
    'savannadashboard',
    ...
)
```

Then point the dashboard at the Savanna API and restart Apache:

```
$ cd /opt/stack/horizon/openstack_dashboard/local
$ vi local_settings.py
SAVANNA_URL = 'http://localhost:8386/v1.0'

$ sudo service apache2 restart
```

Provisioning a Hadoop cluster

As a first step, we need to configure the Keystone-related environment variables to get an authentication token:

```
ubuntu@ip-10-59-33-68:~$ vi .bashrc
# lines added to .bashrc:
export OS_AUTH_URL=http://127.0.0.1:5000/v2.0/
export OS_TENANT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD=nova

ubuntu@ip-10-59-33-68:~$ source .bashrc
ubuntu@ip-10-59-33-68:~$ env | grep OS
OS_PASSWORD=nova
OS_AUTH_URL=http://127.0.0.1:5000/v2.0/
OS_USERNAME=admin
OS_TENANT_NAME=admin
ubuntu@ip-10-59-33-68:~$ keystone token-get
+-----------+----------------------------------+
| Property  | Value                            |
+-----------+----------------------------------+
| expires   | 2013-08-09T20:31:12Z             |
| id        | bdb582c836e3474f979c5aa8f844c000 |
| tenant_id | 2f46e214984f4990b9c39d9c6222f572 |
| user_id   | 077311b0a8304c8e86dc0dc168a67091 |
+-----------+----------------------------------+
$ export AUTH_TOKEN="bdb582c836e3474f979c5aa8f844c000"
$ export TENANT_ID="2f46e214984f4990b9c39d9c6222f572"
```
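Copying the token and tenant ID by hand is error-prone. A minimal Python sketch that extracts them from the two-column table printed by keystone token-get, assuming the table layout shown above (parse_cli_table is a hypothetical helper):

```python
from typing import Dict


def parse_cli_table(text: str) -> Dict[str, str]:
    """Parse a two-column 'Property | Value' table printed by the OpenStack CLIs."""
    result: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Data rows look like '| id | bdb5... |'; border rows start with '+'.
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        if len(cells) == 2 and cells != ["Property", "Value"]:
            result[cells[0]] = cells[1]
    return result


SAMPLE = """\
+-----------+----------------------------------+
| Property  | Value                            |
+-----------+----------------------------------+
| expires   | 2013-08-09T20:31:12Z             |
| id        | bdb582c836e3474f979c5aa8f844c000 |
| tenant_id | 2f46e214984f4990b9c39d9c6222f572 |
| user_id   | 077311b0a8304c8e86dc0dc168a67091 |
+-----------+----------------------------------+
"""

if __name__ == "__main__":
    token = parse_cli_table(SAMPLE)
    print(f'export AUTH_TOKEN="{token["id"]}"')
    print(f'export TENANT_ID="{token["tenant_id"]}"')
```

In practice the text would come from subprocess.run(["keystone", "token-get"], capture_output=True, text=True).stdout rather than from the sample string.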

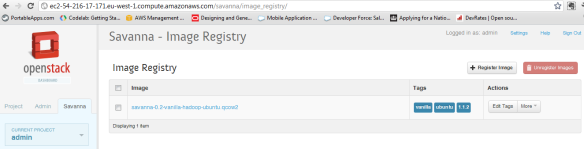

Then we need to create the Glance image that we want to use for our Hadoop cluster. In our example we used Mirantis’s vanilla image, but we could also build our own image:

```
$ wget http://savanna-files.mirantis.com/savanna-0.2-vanilla-1.1.2-ubuntu-12.10.qcow2
$ glance image-create --name=savanna-0.2-vanilla-hadoop-ubuntu.qcow2 \
    --disk-format=qcow2 --container-format=bare \
    < ./savanna-0.2-vanilla-1.1.2-ubuntu-12.10.qcow2
ubuntu@ip-10-59-33-68:~/devstack$ glance image-list
+--------------------------------------+-----------------------------------------+-------------+------------------+-----------+--------+
| ID                                   | Name                                    | Disk Format | Container Format | Size      | Status |
+--------------------------------------+-----------------------------------------+-------------+------------------+-----------+--------+
| d0d64f5c-9c15-4e7b-ad4c-13859eafa7b8 | cirros-0.3.1-x86_64-uec                 | ami         | ami              | 25165824  | active |
| fee679ee-e0c0-447e-8ebd-028050b54af9 | cirros-0.3.1-x86_64-uec-kernel          | aki         | aki              | 4955792   | active |
| 1e52089b-930a-4dfc-b707-89b568d92e7e | cirros-0.3.1-x86_64-uec-ramdisk         | ari         | ari              | 3714968   | active |
| d28051e2-9ddd-45f0-9edc-8923db46fdf9 | savanna-0.2-vanilla-hadoop-ubuntu.qcow2 | qcow2       | bare             | 551699456 | active |
+--------------------------------------+-----------------------------------------+-------------+------------------+-----------+--------+
$ export IMAGE_ID=d28051e2-9ddd-45f0-9edc-8923db46fdf9
```
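Picking the image ID out of the glance image-list table can also be scripted. A minimal Python sketch, assuming the ASCII table layout shown above with an ID and a Name column (parse_table_rows and find_image_id are hypothetical helpers):

```python
from typing import Dict, List, Optional


def parse_table_rows(text: str) -> List[Dict[str, str]]:
    """Turn an ASCII table printed by the glance/nova CLIs into one dict per row."""
    rows = [
        [cell.strip() for cell in line.strip()[1:-1].split("|")]
        for line in text.splitlines()
        if line.strip().startswith("|")  # skip '+----+' border lines
    ]
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def find_image_id(table: str, name: str) -> Optional[str]:
    """Return the ID of the first image with the given name, or None."""
    for row in parse_table_rows(table):
        if row.get("Name") == name:
            return row.get("ID")
    return None
```

The table text would typically come from running glance image-list via subprocess.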

Next, we installed httpie, an open source command-line HTTP client that can be used to send REST requests to the Savanna API:

$ sudo pip install httpie

From now on, we will use httpie to send commands to Savanna. First, we need to register the image with Savanna:

```
$ export SAVANNA_URL="http://localhost:8386/v1.0/$TENANT_ID"
$ http POST $SAVANNA_URL/images/$IMAGE_ID X-Auth-Token:$AUTH_TOKEN username=ubuntu
HTTP/1.1 202 ACCEPTED
Content-Length: 411
Content-Type: application/json
Date: Thu, 08 Aug 2013 21:28:07 GMT

{
    "image": {
        "OS-EXT-IMG-SIZE:size": 551699456,
        "created": "2013-08-08T21:05:55Z",
        "description": "None",
        "id": "d28051e2-9ddd-45f0-9edc-8923db46fdf9",
        "metadata": {
            "_savanna_description": "None",
            "_savanna_username": "ubuntu"
        },
        "minDisk": 0,
        "minRam": 0,
        "name": "savanna-0.2-vanilla-hadoop-ubuntu.qcow2",
        "progress": 100,
        "status": "ACTIVE",
        "tags": [],
        "updated": "2013-08-08T21:28:07Z",
        "username": "ubuntu"
    }
}
```
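httpie turns username=ubuntu into the JSON body {"username": "ubuntu"} and, because a body is present, sends a POST. To script the same calls without httpie, a minimal sketch with Python's standard urllib (savanna_request is a hypothetical helper; the request is only built here, not sent):

```python
import json
import urllib.request
from typing import Any, Optional


def savanna_request(url: str, token: str, body: Optional[Any] = None,
                    method: Optional[str] = None) -> urllib.request.Request:
    """Build a request equivalent to `http [METHOD] URL X-Auth-Token:TOKEN ...`.

    Mirrors httpie's defaults: a JSON body implies POST, no body implies GET.
    """
    headers = {"X-Auth-Token": token, "Accept": "application/json"}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    if method is None:
        method = "POST" if data is not None else "GET"
    return urllib.request.Request(url, data=data, headers=headers, method=method)


if __name__ == "__main__":
    req = savanna_request(
        "http://localhost:8386/v1.0/2f46e214984f4990b9c39d9c6222f572"
        "/images/d28051e2-9ddd-45f0-9edc-8923db46fdf9",
        token="bdb582c836e3474f979c5aa8f844c000",
        body={"username": "ubuntu"},
    )
    print(req.get_method(), req.full_url, req.data)
```

Sending it is then urllib.request.urlopen(req), which raises urllib.error.HTTPError for 4xx and 5xx responses.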

```
$ http $SAVANNA_URL/images/$IMAGE_ID/tag X-Auth-Token:$AUTH_TOKEN tags:='["vanilla", "1.1.2", "ubuntu"]'
HTTP/1.1 202 ACCEPTED
Content-Length: 532
Content-Type: application/json
Date: Thu, 08 Aug 2013 21:29:25 GMT

{
    "image": {
        "OS-EXT-IMG-SIZE:size": 551699456,
        "created": "2013-08-08T21:05:55Z",
        "description": "None",
        "id": "d28051e2-9ddd-45f0-9edc-8923db46fdf9",
        "metadata": {
            "_savanna_description": "None",
            "_savanna_tag_1.1.2": "True",
            "_savanna_tag_ubuntu": "True",
            "_savanna_tag_vanilla": "True",
            "_savanna_username": "ubuntu"
        },
        "minDisk": 0,
        "minRam": 0,
        "name": "savanna-0.2-vanilla-hadoop-ubuntu.qcow2",
        "progress": 100,
        "status": "ACTIVE",
        "tags": [
            "vanilla",
            "ubuntu",
            "1.1.2"
        ],
        "updated": "2013-08-08T21:29:25Z",
        "username": "ubuntu"
    }
}
```
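The response shows how the tags are stored: each one becomes a Glance image property named _savanna_tag_<tag> with the string value "True". Based only on that observed output, a minimal Python sketch that reads the tags back and checks that an image carries both the plugin name and the Hadoop version (presumably why the image is tagged with vanilla and 1.1.2 in the first place; the helper names are hypothetical):

```python
from typing import Dict, Set

TAG_PREFIX = "_savanna_tag_"  # key prefix observed in the response above

SAMPLE_METADATA = {
    "_savanna_description": "None",
    "_savanna_tag_1.1.2": "True",
    "_savanna_tag_ubuntu": "True",
    "_savanna_tag_vanilla": "True",
    "_savanna_username": "ubuntu",
}


def tags_from_metadata(metadata: Dict[str, str]) -> Set[str]:
    """Recover tags from Glance properties such as '_savanna_tag_vanilla': 'True'."""
    return {
        key[len(TAG_PREFIX):]
        for key, value in metadata.items()
        if key.startswith(TAG_PREFIX) and value == "True"
    }


def is_tagged_for(metadata: Dict[str, str], plugin: str, hadoop_version: str) -> bool:
    """True if the image carries both the plugin-name and Hadoop-version tags."""
    return {plugin, hadoop_version} <= tags_from_metadata(metadata)


if __name__ == "__main__":
    print(sorted(tags_from_metadata(SAMPLE_METADATA)))
    print(is_tagged_for(SAMPLE_METADATA, "vanilla", "1.1.2"))
```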

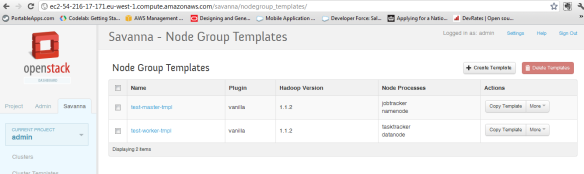

Then we need to create the node group templates (JSON files) that will be sent to Savanna. There is one template for the master node (namenode, jobtracker) and another for the worker nodes (datanode, tasktracker). The Hadoop version is 1.1.2.

```
$ vi ng_master_template_create.json
{
    "name": "test-master-tmpl",
    "flavor_id": "2",
    "plugin_name": "vanilla",
    "hadoop_version": "1.1.2",
    "node_processes": ["jobtracker", "namenode"]
}

$ vi ng_worker_template_create.json
{
    "name": "test-worker-tmpl",
    "flavor_id": "2",
    "plugin_name": "vanilla",
    "hadoop_version": "1.1.2",
    "node_processes": ["tasktracker", "datanode"]
}
```
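The two files differ only in the template name and the list of Hadoop processes. A minimal Python sketch that builds both payloads from one hypothetical helper and checks that, between them, they cover the four Hadoop 1.x daemons; the check is only a sanity test, not a validation Savanna is shown to perform here:

```python
import json
from typing import Dict, List

# The four daemons of a Hadoop 1.x cluster, as named in the templates above.
HADOOP1_PROCESSES = {"namenode", "jobtracker", "datanode", "tasktracker"}


def node_group_template(name: str, processes: List[str], flavor_id: str = "2",
                        plugin_name: str = "vanilla",
                        hadoop_version: str = "1.1.2") -> Dict:
    """Payload for POST $SAVANNA_URL/node-group-templates, as used in this post."""
    return {
        "name": name,
        "flavor_id": flavor_id,
        "plugin_name": plugin_name,
        "hadoop_version": hadoop_version,
        "node_processes": list(processes),
    }


def check_processes(templates: List[Dict]) -> None:
    """Raise ValueError if a Hadoop 1.x daemon is missing from every template."""
    present = {p for t in templates for p in t["node_processes"]}
    missing = HADOOP1_PROCESSES - present
    if missing:
        raise ValueError(f"no template runs: {', '.join(sorted(missing))}")


if __name__ == "__main__":
    master = node_group_template("test-master-tmpl", ["jobtracker", "namenode"])
    worker = node_group_template("test-worker-tmpl", ["tasktracker", "datanode"])
    check_processes([master, worker])
    print(json.dumps(master, indent=4))
    print(json.dumps(worker, indent=4))
```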

```
$ http $SAVANNA_URL/node-group-templates X-Auth-Token:$AUTH_TOKEN < ng_master_template_create.json
HTTP/1.1 202 ACCEPTED
Content-Length: 387
Content-Type: application/json
Date: Thu, 08 Aug 2013 21:58:00 GMT

{
    "node_group_template": {
        "created": "2013-08-08T21:58:00",
        "flavor_id": "2",
        "hadoop_version": "1.1.2",
        "id": "b3a79c88-b6fb-43d2-9a56-310218c66f7c",
        "name": "test-master-tmpl",
        "node_configs": {},
        "node_processes": [
            "jobtracker",
            "namenode"
        ],
        "plugin_name": "vanilla",
        "updated": "2013-08-08T21:58:00",
        "volume_mount_prefix": "/volumes/disk",
        "volumes_per_node": 0,
        "volumes_size": 10
    }
}
```

```
$ http $SAVANNA_URL/node-group-templates X-Auth-Token:$AUTH_TOKEN < ng_worker_template_create.json
HTTP/1.1 202 ACCEPTED
Content-Length: 388
Content-Type: application/json
Date: Thu, 08 Aug 2013 21:59:41 GMT

{
    "node_group_template": {
        "created": "2013-08-08T21:59:41",
        "flavor_id": "2",
        "hadoop_version": "1.1.2",
        "id": "773b2cfb-1e05-46f4-923f-13edc7d6aac6",
        "name": "test-worker-tmpl",
        "node_configs": {},
        "node_processes": [
            "tasktracker",
            "datanode"
        ],
        "plugin_name": "vanilla",
        "updated": "2013-08-08T21:59:41",
        "volume_mount_prefix": "/volumes/disk",
        "volumes_per_node": 0,
        "volumes_size": 10
    }
}
```

The next step is to define the cluster template:

```
$ vi cluster_template_create.json
{
    "name": "demo-cluster-template",
    "plugin_name": "vanilla",
    "hadoop_version": "1.1.2",
    "node_groups": [
        {
            "name": "master",
            "node_group_template_id": "b3a79c88-b6fb-43d2-9a56-310218c66f7c",
            "count": 1
        },
        {
            "name": "workers",
            "node_group_template_id": "773b2cfb-1e05-46f4-923f-13edc7d6aac6",
            "count": 2
        }
    ]
}
```

```
$ http $SAVANNA_URL/cluster-templates X-Auth-Token:$AUTH_TOKEN < cluster_template_create.json
HTTP/1.1 202 ACCEPTED
Content-Length: 815
Content-Type: application/json
Date: Fri, 09 Aug 2013 07:04:24 GMT

{
    "cluster_template": {
        "anti_affinity": [],
        "cluster_configs": {},
        "created": "2013-08-09T07:04:24",
        "hadoop_version": "1.1.2",
        "id": "64c4117b-acee-4da7-937b-cb964f0471a9",
        "name": "demo-cluster-template",
        "node_groups": [
            {
                "count": 1,
                "flavor_id": "2",
                "name": "master",
                "node_configs": {},
                "node_group_template_id": "b3a79c88-b6fb-43d2-9a56-310218c66f7c",
                "node_processes": [
                    "jobtracker",
                    "namenode"
                ],
                "volume_mount_prefix": "/volumes/disk",
                "volumes_per_node": 0,
                "volumes_size": 10
            },
            {
                "count": 2,
                "flavor_id": "2",
                "name": "workers",
                "node_configs": {},
                "node_group_template_id": "773b2cfb-1e05-46f4-923f-13edc7d6aac6",
                "node_processes": [
                    "tasktracker",
                    "datanode"
                ],
                "volume_mount_prefix": "/volumes/disk",
                "volumes_per_node": 0,
                "volumes_size": 10
            }
        ],
        "plugin_name": "vanilla",
        "updated": "2013-08-09T07:04:24"
    }
}
```
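The cluster template refers to the node group templates only through the IDs returned in the earlier responses. A minimal Python sketch of that chaining (all helper names are hypothetical); with one master and two workers, the template requests three VMs in total:

```python
from typing import Dict, List, Tuple


def template_id(response: Dict) -> str:
    """Extract the ID from a node-group-templates POST response."""
    return response["node_group_template"]["id"]


def cluster_template(name: str, groups: List[Tuple[str, str, int]],
                     plugin_name: str = "vanilla",
                     hadoop_version: str = "1.1.2") -> Dict:
    """Payload for POST $SAVANNA_URL/cluster-templates.

    groups: (node group name, node group template ID, instance count) tuples.
    """
    return {
        "name": name,
        "plugin_name": plugin_name,
        "hadoop_version": hadoop_version,
        "node_groups": [
            {"name": g, "node_group_template_id": tid, "count": count}
            for g, tid, count in groups
        ],
    }


def instance_count(template: Dict) -> int:
    """Total number of VMs the cluster template will request."""
    return sum(group["count"] for group in template["node_groups"])


if __name__ == "__main__":
    master = {"node_group_template": {"id": "b3a79c88-b6fb-43d2-9a56-310218c66f7c"}}
    worker = {"node_group_template": {"id": "773b2cfb-1e05-46f4-923f-13edc7d6aac6"}}
    tmpl = cluster_template("demo-cluster-template",
                            [("master", template_id(master), 1),
                             ("workers", template_id(worker), 2)])
    print(instance_count(tmpl))
```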

Now we are ready to create the Hadoop cluster:

```
$ vi cluster_create.json
{
    "name": "cluster-1",
    "plugin_name": "vanilla",
    "hadoop_version": "1.1.2",
    "cluster_template_id": "64c4117b-acee-4da7-937b-cb964f0471a9",
    "user_keypair_id": "savanna",
    "default_image_id": "d28051e2-9ddd-45f0-9edc-8923db46fdf9"
}
```

```
$ http $SAVANNA_URL/clusters X-Auth-Token:$AUTH_TOKEN < cluster_create.json
HTTP/1.1 202 ACCEPTED
Content-Length: 1153
Content-Type: application/json
Date: Fri, 09 Aug 2013 07:28:14 GMT

{
    "cluster": {
        "anti_affinity": [],
        "cluster_configs": {},
        "cluster_template_id": "64c4117b-acee-4da7-937b-cb964f0471a9",
        "created": "2013-08-09T07:28:14",
        "default_image_id": "d28051e2-9ddd-45f0-9edc-8923db46fdf9",
        "hadoop_version": "1.1.2",
        "id": "d919f1db-522f-45ab-aadd-c078ba3bb4e3",
        "info": {},
        "name": "cluster-1",
        "node_groups": [
            {
                "count": 1,
                "created": "2013-08-09T07:28:14",
                "flavor_id": "2",
                "instances": [],
                "name": "master",
                "node_configs": {},
                "node_group_template_id": "b3a79c88-b6fb-43d2-9a56-310218c66f7c",
                "node_processes": [
                    "jobtracker",
                    "namenode"
                ],
                "updated": "2013-08-09T07:28:14",
                "volume_mount_prefix": "/volumes/disk",
                "volumes_per_node": 0,
                "volumes_size": 10
            },
            {
                "count": 2,
                "created": "2013-08-09T07:28:14",
                "flavor_id": "2",
                "instances": [],
                "name": "workers",
                "node_configs": {},
                "node_group_template_id": "773b2cfb-1e05-46f4-923f-13edc7d6aac6",
                "node_processes": [
                    "tasktracker",
                    "datanode"
                ],
                "updated": "2013-08-09T07:28:14",
                "volume_mount_prefix": "/volumes/disk",
                "volumes_per_node": 0,
                "volumes_size": 10
            }
        ],
        "plugin_name": "vanilla",
        "status": "Validating",
        "updated": "2013-08-09T07:28:14",
        "user_keypair_id": "savanna"
    }
}
```
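The cluster comes back immediately with status "Validating"; provisioning continues in the background. A minimal Python polling sketch, assuming that a GET on $SAVANNA_URL/clusters/<cluster id> returns the same {"cluster": {...}} document with an updated "status" field, and that "Active" and "Error" are the terminal states (both are assumptions, not confirmed by this post). The status lookup is passed in as a function, so the loop itself does not depend on any HTTP library:

```python
import time
from typing import Callable, Collection


def wait_for_cluster(get_status: Callable[[], str],
                     done: Collection[str] = ("Active",),
                     failed: Collection[str] = ("Error",),
                     interval: float = 10.0,
                     timeout: float = 1800.0,
                     sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_status() until it reports a terminal status or the timeout expires.

    Returns the final status; raises RuntimeError on a failure status and
    TimeoutError if the deadline passes first. The "Active"/"Error" defaults
    are assumptions about Savanna's status names.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = get_status()
        if status in done:
            return status
        if status in failed:
            raise RuntimeError(f"cluster entered status {status!r}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"cluster still {status!r} after {timeout} s")
        sleep(interval)
```

For example, get_status could fetch $SAVANNA_URL/clusters/d919f1db-522f-45ab-aadd-c078ba3bb4e3 with the X-Auth-Token header and return response["cluster"]["status"].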

After a while, we can run the nova list command to check whether the instances have been created and are running:

```
$ nova list
+--------------------------------------+-----------------------+--------+------------+-------------+----------------------------------+
| ID                                   | Name                  | Status | Task State | Power State | Networks                         |
+--------------------------------------+-----------------------+--------+------------+-------------+----------------------------------+
| 1a9f43bf-cddb-4556-877b-cc993730da88 | cluster-1-master-001  | ACTIVE | None       | Running     | private=10.0.0.2, 192.168.55.227 |
| bb55f881-1f96-4669-a94a-58cbf4d88f39 | cluster-1-workers-001 | ACTIVE | None       | Running     | private=10.0.0.3, 192.168.55.226 |
| 012a24e2-fa33-49f3-b051-9ee2690864df | cluster-1-workers-002 | ACTIVE | None       | Running     | private=10.0.0.4, 192.168.55.225 |
+--------------------------------------+-----------------------+--------+------------+-------------+----------------------------------+
```
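To find the master's address without reading the table by eye, a minimal Python sketch that parses the nova list output, assuming a single network per instance in the form name=fixed-ip, floating-ip as above, and instance names of the form <cluster>-<node group>-001 as observed here (the helper names are hypothetical):

```python
from typing import Dict, List


def parse_table_rows(text: str) -> List[Dict[str, str]]:
    """Turn an ASCII table printed by the nova/glance CLIs into one dict per row."""
    rows = [
        [cell.strip() for cell in line.strip()[1:-1].split("|")]
        for line in text.splitlines()
        if line.strip().startswith("|")  # skip '+----+' border lines
    ]
    if not rows:
        return []
    header, *data = rows
    return [dict(zip(header, row)) for row in data]


def instance_addresses(nova_list: str) -> Dict[str, List[str]]:
    """Map instance name -> IPs from a Networks cell like 'private=10.0.0.2, 192.168.55.227'."""
    addresses = {}
    for row in parse_table_rows(nova_list):
        _, _, ips = row.get("Networks", "").partition("=")
        addresses[row["Name"]] = [ip.strip() for ip in ips.split(",") if ip.strip()]
    return addresses


def master_ip(nova_list: str, cluster: str = "cluster-1") -> str:
    """First (fixed) IP of the instance named '<cluster>-master-001'."""
    return instance_addresses(nova_list)[f"{cluster}-master-001"][0]
```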

Now we can log in to the Hadoop master instance and run Hadoop commands, for example the pi example job:

```
$ ssh -i savanna.pem ubuntu@10.0.0.2
$ sudo chmod 777 /usr/share/hadoop
$ sudo su hadoop
$ cd /usr/share/hadoop
$ hadoop jar hadoop-examples-1.1.2.jar pi 10 100
```
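The arguments pi 10 100 mean 10 map tasks with 100 samples each. Hadoop's example estimates pi with a quasi-Monte Carlo method: it places points from a Halton sequence (bases 2 and 3) in the unit square and counts how many fall inside the inscribed circle. A single-process Python sketch of the same idea; it is a simplification that does not reproduce the Hadoop job's map/reduce split, so its output should not be expected to match the job's digits exactly:

```python
def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of index."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def estimate_pi(num_maps: int, samples_per_map: int) -> float:
    """Halton-sequence (bases 2 and 3) estimate of pi over num_maps * samples_per_map points."""
    total = num_maps * samples_per_map
    inside = 0
    for i in range(1, total + 1):
        # Shift the point so the circle of radius 0.5 is centred at the origin.
        x = radical_inverse(i, 2) - 0.5
        y = radical_inverse(i, 3) - 0.5
        if x * x + y * y <= 0.25:
            inside += 1
    # Circle area / square area = pi / 4
    return 4.0 * inside / total


if __name__ == "__main__":
    print(estimate_pi(10, 100))
```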

Savanna UI via Horizon

So far, we have used a command-line tool, httpie, to send the REST API calls that create the node group templates, the cluster template and the cluster itself. The same functionality is also available via Horizon, the standard OpenStack dashboard.

First we need to register the image with Savanna:

Then we need to create the nodegroup templates:

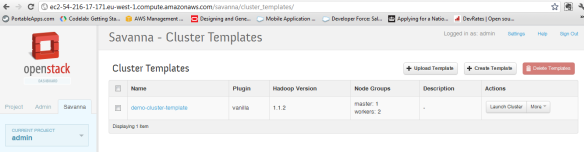

After that we have to create the cluster template:

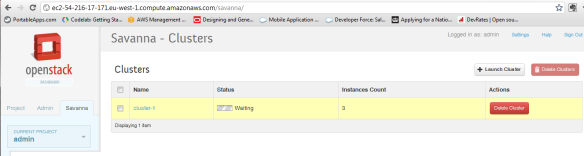

And finally we have to create the cluster:


Hello Sir,

I am getting the following error while sending Savanna commands:

```
omkar@ubuntu:~$ http POST $SAVANNA_URL/images/$IMAGE_ID X-Auth-Token:$AUTH_TOKEN username=ubuntu
HTTP/1.1 401 Unauthorized
Content-Length: 276
Content-Type: text/plain; charset=UTF-8
Date: Tue, 03 Sep 2013 11:24:06 GMT
Www-Authenticate: Keystone uri='http://127.0.0.1:35357'

401 Unauthorized

This server could not verify that you are authorized to access the document you requested. Either you supplied the wrong credentials (e.g., bad password), or your browser does not understand how to supply the credentials required.

Authentication required
```

From the error message, I guess you are using the wrong authentication token. You can retrieve it with keystone token-get; see the relevant section of the post:

```
$ keystone token-get
+-----------+----------------------------------+
| Property  | Value                            |
+-----------+----------------------------------+
| expires   | 2013-08-09T20:31:12Z             |
| id        | bdb582c836e3474f979c5aa8f844c000 |
| tenant_id | 2f46e214984f4990b9c39d9c6222f572 |
| user_id   | 077311b0a8304c8e86dc0dc168a67091 |
+-----------+----------------------------------+
$ export AUTH_TOKEN="bdb582c836e3474f979c5aa8f844c000"
$ export TENANT_ID="2f46e214984f4990b9c39d9c6222f572"
```

Sir,

I checked all the entries, and they are all correct.

Here is my screen output:

```
omkar@ubuntu:~$ export OS_AUTH_URL=http://127.0.0.1:5000/v2.0/
omkar@ubuntu:~$ export OS_TENANT_NAME=admin
omkar@ubuntu:~$ export OS_USERNAME=admin
omkar@ubuntu:~$ export OS_PASSWORD=omkar
omkar@ubuntu:~$ source .bashrc
omkar@ubuntu:~$ keystone token-get
+-----------+----------------------------------+
| Property  | Value                            |
+-----------+----------------------------------+
| expires   | 2013-09-06T02:13:05Z             |
| id        | 0a744af19d6945a296f1d7989ede3115 |
| tenant_id | f56cef6884084b48aecebc980badec99 |
| user_id   | b307c2134a8f436397d2ce30c2c77991 |
+-----------+----------------------------------+
omkar@ubuntu:~$ export AUTH_TOKEN="0a744af19d6945a296f1d7989ede3115"
omkar@ubuntu:~$ export TENANT_ID="f56cef6884084b48aecebc980badec99"
omkar@ubuntu:~$ glance index
ID                                   Name                           Disk Format          Container Format     Size
------------------------------------ ------------------------------ -------------------- -------------------- --------------
52a3d3cf-021e-4dba-b263-9e675a0701ec savanna                        qcow2                ovf                  551699456
32b02694-636b-4c2f-b385-bb486c1a53d4 Ubuntu 12.04 LTS               qcow2                ovf                  226426880
omkar@ubuntu:~$ export IMAGE_ID=52a3d3cf-021e-4dba-b263-9e675a0701ec
omkar@ubuntu:~$ export SAVANNA_URL="http://localhost:8386/v1.0/$TENANT_ID"
omkar@ubuntu:~$ http POST $SAVANNA_URL/images/$IMAGE_ID X-Auth-Token:$AUTH_TOKEN username=ubuntu
HTTP/1.1 401 Unauthorized
Content-Length: 276
Content-Type: text/plain; charset=UTF-8
Date: Thu, 05 Sep 2013 02:16:07 GMT
Www-Authenticate: Keystone uri='http://127.0.0.1:35357'

401 Unauthorized

This server could not verify that you are authorized to access the document you requested. Either you supplied the wrong credentials (e.g., bad password), or your browser does not understand how to supply the credentials required.

Authentication required

omkar@ubuntu:~$
```

Please help me. I am stuck at this point.

Can you try the following:

$ http $SAVANNA_URL/images X-Auth-Token:$AUTH_TOKEN

And perhaps this one as well, to rule out any hidden characters or other issues in the environment variables:

$ http http://localhost:8386/v1.0/f56cef6884084b48aecebc980badec99/images X-Auth-Token:0a744af19d6945a296f1d7989ede3115
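Another way to rule out hidden characters is to scan the exported variables for anything that does not belong in a token or URL, such as a typographic quote pasted from a web page (” instead of ") or a trailing carriage return. A minimal Python sketch (suspicious_chars is a hypothetical helper; it reads the environment of the process it runs in, so start it from the same shell where the variables were exported):

```python
import os
import unicodedata
from typing import List, Tuple

# Variables exported in this post.
VARIABLES = ("AUTH_TOKEN", "TENANT_ID", "IMAGE_ID", "SAVANNA_URL")


def suspicious_chars(value: str) -> List[Tuple[int, str, str]]:
    """Return (position, char, Unicode name) for chars unlikely to belong in a token or URL.

    Flags anything outside printable ASCII, plus whitespace (e.g. a stray
    space or a trailing carriage return).
    """
    found = []
    for pos, ch in enumerate(value):
        if not (ch.isascii() and ch.isprintable()) or ch.isspace():
            found.append((pos, ch, unicodedata.name(ch, "<unnamed>")))
    return found


if __name__ == "__main__":
    for var in VARIABLES:
        value = os.environ.get(var)
        if value is None:
            print(f"{var}: not set")
            continue
        problems = suspicious_chars(value)
        print(f"{var}: {'OK' if not problems else problems}")
```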