This time I write about Google BigQuery, a service that Google have made publicly available in May, 2012. It was around for some time, some Google Research blog talked about it in 2010, then Google have announced a limited preview in November, 2011 and eventually it went live this month.

The technology is based on Dremel, not MapReduce. The reason for having an alternative to MapReduce is described in the Dremel paper: “Dremel can execute many queries over such data that would ordinarily require a sequence of MapReduce … jobs, but at a fraction of the execution time. Dremel is not intended as a replacement for MR and is often used in conjunction with it to analyze outputs of MR pipelines or rapidly prototype larger computations“.

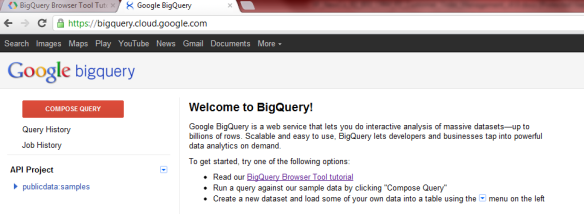

So what is BigQuery? As it is answered on Google BigQuery website: “Google BigQuery is a web service that lets you do interactive analysis of massive datasets—up to billions of rows.”

Getting Started with BigQuery

In order to be able to use BigQuery, first you need to sign up for it via Google API console. Once that is done, you can start using the service. The easiest way to start with is BigQuery Browser Tool.

BigQuery Browser Tool

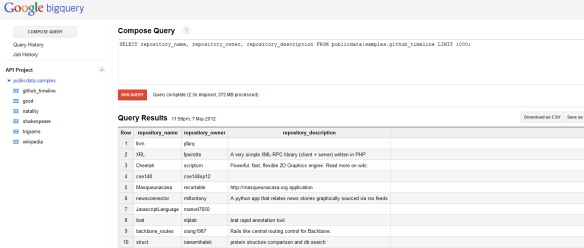

When you first login to BigQuery Browser Tool, you see the following welcome message:

There is already a public dataset available, so you can have a quick look around and experience how to use BigQuery Browser Tool. E.g. here is the schema of github_timeline table, a snapshop from GitHub archive:

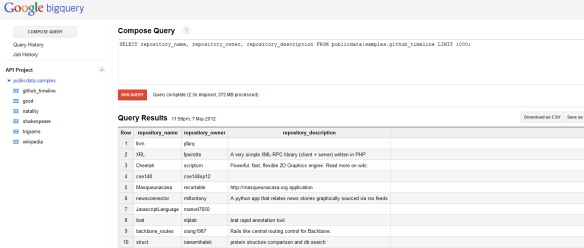

You can run a simple query using COMPOSE QUERY from the browser tool, the syntax is SQL-like:

SELECT repository_name, repository_onwer, repository_description FROM publicdata:samples.github_timeline LIMIT 1000;

So far so good… Let us create now our own tables. The dataset that I was using is from WorldBank Data Catalogue and these are GDP and population data for the countries all over the world. These are available in CSV format (as well as Excel and PDF).

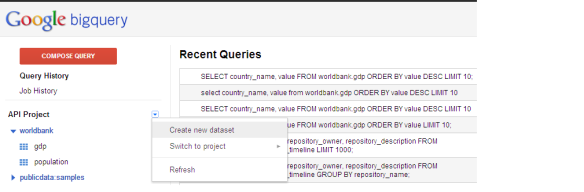

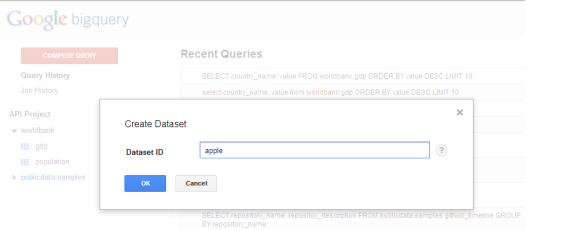

As a first step, we need to create the dataset – dataset is basically one or more tables in BigQuery. You need to click on the down-arrow icon, next to the API project and select “Create new dataset”.

Then you need to create the table. Click on the down-arrow for the dataset (worldbank in our case) and select “Create new table”

Then you need to define table parameters such as name, schema and source file to be uploaded. Note: Internet Explorer 8 does not seem to support CSV file upload (“”File upload is not currently supported in your browser.” message occurs for File upload link). You’d better go with Chrome that supports CSV file upload.

When you upload the file, you need to specify the schema in the following format: county_code:string,ranking:integer,country_name:string,value:integer

There are advanced option available, too: you can use e.g tab separated files instead of comma separated ones, you can defined how many invalid rows are accepted, how many rows are skipped, etc.

During the upload, the data is validated against the specified schema, if that is violated, then you will get error messages in the Job history. (e.g. “Too many columns: expected 4 column(s) but got 5 column(s)” )

Once the upload is successfully finished, you are ready to execute queries on the data. You can use COMPOSE QUERY for that, as we have already descibed for the github_timeline table. To display the TOP 10 countries having the highest GDP values, you run the following query:

SELECT country_name, value FROM worldbank.gdp ORDER BY value DESC LIMIT 10

BigQuery Command Line Tool

That was easy but we are hard-core software guys, aren’t we? We need command line, not just browser based functionality! Relax, there is BigQuery command line tool, written in python.

You can download it from here and install it by unzipping the file.

To install it, you just run: python setup.py install

I used BigQuery Command line tool from a Windows 7 machine, the usage is very same on Linux with the exception of where the credentials are stored in your local computer. (that could be ~/.bigquery.v2.token and ~/.bigqueryrc in case of Linux and %USERPROFILE%\.bigquery.v2.token and %USRPROFILE%\.bigqueryrc in case of Windows).

When you run it at the first time it needs to be authenticated via OAuth2.

C:\BigQuery\bigquery-2.0.4>python bq.py shell

******************************************************************

** No OAuth2 credentials found, beginning authorization process **

******************************************************************

Go to the following link in your browser:

https://accounts.google.com/o/oauth2/auth?scope=https%3A%2F%2Fwww.googleapis

.com%2Fauth%2Fbigquery&redirect_uri=urn%3Aietf%3Awg%3Aoauth%3A2.0%3Aoob&response

_type=code&client_id=123456789.apps.googleusercontent.com&access_type=offline

Enter verification code: *********

Authentication successful.

************************************************

** Continuing execution of BigQuery operation **

************************************************

Welcome to BigQuery! (Type help for more information.)

BigQuery> ls

projectId friendlyName

-------------- --------------

190120083879 API Project

BigQuery> exit

So at the first time, you need to go the the given URL with your browser, Allow Access to BigQuery Command Line tool and copy&paste the generated verification code at the “Enter verification code” prompt. Then it will be stored on your local machine, as mentioned above and you do not need to allow access from then on. (unless you want to initialize the entire access process)

So at the second attempt to run the BigQuery shell it will go flawless without authentication:

C:\BigQuery\bigquery-2.0.4>python bq.py shell

Welcome to BigQuery! (Type help for more information.)

BigQuery> ls

projectId friendlyName

-------------- --------------

190120083879 API Project

BigQuery> ls 190120083879

datasetId

-----------

worldbank

BigQuery> exit

Goodbye.

To check the schema for GDP and population tables (population table has the same schema as GDP and was also uploaded the same way as GDP- via BigQuery Browser tool):

C:\BigQuery\bigquery-2.0.4>python bq.py show 190120083879:worldbank.gdp

Table 190120083879:worldbank.gdp

Last modified Schema Total Rows Total Bytes

----------------- ------------------------- ------------ -------------

13 May 12:10:33 |- county_code: string 195 6265

|- ranking: integer

|- country_name: string

|- value: integer

C:\BigQuery\bigquery-2.0.4>python bq.py show 190120083879:worldbank.population

Table 190120083879:worldbank.population

Last modified Schema Total Rows Total Bytes

----------------- ------------------------- ------------ -------------

13 May 12:14:02 |- county_code: string 215 7007

|- ranking: integer

|- country_name: string

|- value: integer

To check the first 10 rows in population table (you may notice that the values are ordered, it is because that values were already ordered in the WorldBank CSV file):

C:\BigQuery\bigquery-2.0.4>python bq.py head -n 10 190120083879:worldbank.popula

tion

+-------------+---------+--------------------+---------+

| county_code | ranking | country_name | value |

+-------------+---------+--------------------+---------+

| CHN | 1 | China | 1338300 |

| IND | 2 | India | 1224615 |

| USA | 3 | United States | 309349 |

| IDN | 4 | Indonesia | 239870 |

| BRA | 5 | Brazil | 194946 |

| PAK | 6 | Pakistan | 173593 |

| NGA | 7 | Nigeria | 158423 |

| BGD | 8 | Bangladesh | 148692 |

| RUS | 9 | Russian Federation | 141750 |

| JPN | 10 | Japan | 127451 |

+-------------+---------+--------------------+---------+

In order to run a SELECT query against a table, first you need to initialize the project, so you have to have the .bigqueryrc properly configured:

C:\Users\istvan>type .bigqueryrc

project_id = 190120083879

credential_file = c:\Users\istvan\.bigquery.v2.token

dataset_id = worldbank

C:\Users\istvan>

Then you can run:

C:\BigQuery\bigquery-2.0.4>python bq.py query "SELECT country_name, value FROM w

orldbank.gdp ORDER BY value DESC LIMIT 10"

Waiting on job_5745d8eb41cf489fbf6ffb7a3bc3487e ... (0s) Current status: RUNNING

Waiting on job_5745d8eb41cf489fbf6ffb7a3bc3487e ... (0s) Current status: DONE

+----------------+----------+

| country_name | value |

+----------------+----------+

| United States | 14586736 |

| China | 5926612 |

| Japan | 5458837 |

| Germany | 3280530 |

| France | 2560002 |

| United Kingdom | 2261713 |

| Brazil | 2087890 |

| Italy | 2060965 |

| India | 1727111 |

| Canada | 1577040 |

+----------------+----------+

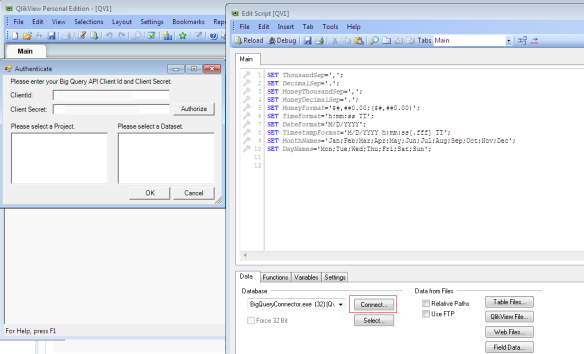

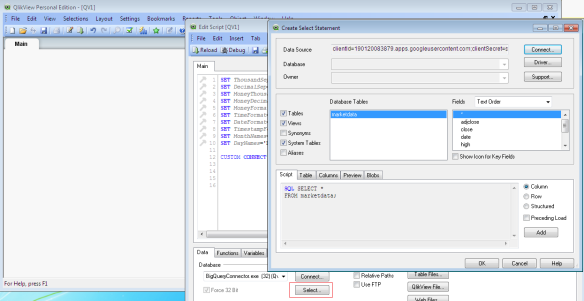

BigQuery API

BigQuery browser tool and command line tool could do in most of the cases. but hell, aren’t we even thougher guys – Master of the APIs? If yes, Google BigQuery can offer APIs and BigQuery client libraries for us, too. These can be in Python, Java, .NET, PHP, Ruby, Objective-C, etc, etc.

Here is a python application that runs the same SELECT query that we used from browser tool and command line:

import httplib2

import sys

import pprint

from apiclient.discovery import build

from apiclient.errors import HttpError

from oauth2client.client import AccessTokenRefreshError

from oauth2client.client import OAuth2WebServerFlow

from oauth2client.file import Storage

from oauth2client.tools import run

FLOW = OAuth2WebServerFlow(

client_id='123456789.apps.googleusercontent.com',

client_secret='*************',

scope='https://www.googleapis.com/auth/bigquery',

user_agent='bq/2.0')

# Run a synchronous query

def runSyncQuery (service, projectId, datasetId, timeout=0):

try:

print 'timeout:%d' % timeout

jobCollection = service.jobs()

queryData = {'query':'SELECT country_name, value FROM worldbank.gdp ORDER BY value DESC LIMIT 10;',

'timeoutMs':timeout}

queryReply = jobCollection.query(projectId=projectId,

body=queryData).execute()

jobReference=queryReply['jobReference']

# Timeout exceeded: keep polling until the job is complete.

while(not queryReply['jobComplete']):

print 'Job not yet complete...'

queryReply = jobCollection.getQueryResults(

projectId=jobReference['projectId'],

jobId=jobReference['jobId'],

timeoutMs=timeout).execute()

pprint.pprint(queryReply)

except AccessTokenRefreshError:

print ("The credentials have been revoked or expired, please re-run"

"the application to re-authorize")

except HttpError as err:

print 'Error in runSyncQuery:', pprint.pprint(err.content)

except Exception as err:

print 'Undefined error' % err

def main():

# If the credentials don't exist or are invalid, run the native client

# auth flow. The Storage object will ensure that if successful the good

# credentials will get written back to a file.

storage = Storage('c:\Users\istvan\.bigquery.v2.token') # Choose a file name to store the credentials.

credentials = storage.get()

if credentials is None or credentials.invalid:

credentials = run(FLOW, storage)

# Create an httplib2.Http object to handle our HTTP requests and authorize it

# with our good credentials.

http = httplib2.Http()

http = credentials.authorize(http)

service = build("bigquery", "v2", http=http)

# Now make calls

print 'Make call'

runSyncQuery(service, projectId='190120083879', datasetId='worldbank')

if __name__ == '__main__':

main(

The output will look like this:

C:\BigQuery\PythonClient>python bq_client.py

Make call

timeout:0

Job not yet complete...

Job not yet complete...

Job not yet complete...

{u'etag': u'"6wEDxP58PwCUv91kOlRB8L7rm_A/69KAOvEhHO4pBtqit7nlzybfIPc"',

u'jobComplete': True,

u'jobReference': {u'jobId': u'job_9a1c0d2bcf9443b18e2204d1f4db476a',

u'projectId': u'190120083879'},

u'kind': u'bigquery#getQueryResultsResponse',

u'rows': [{u'f': [{u'v': u'United States'}, {u'v': u'14586736'}]},

{u'f': [{u'v': u'China'}, {u'v': u'5926612'}]},

{u'f': [{u'v': u'Japan'}, {u'v': u'5458837'}]},

{u'f': [{u'v': u'Germany'}, {u'v': u'3280530'}]},

{u'f': [{u'v': u'France'}, {u'v': u'2560002'}]},

{u'f': [{u'v': u'United Kingdom'}, {u'v': u'2261713'}]},

{u'f': [{u'v': u'Brazil'}, {u'v': u'2087890'}]},

{u'f': [{u'v': u'Italy'}, {u'v': u'2060965'}]},

{u'f': [{u'v': u'India'}, {u'v': u'1727111'}]},

{u'f': [{u'v': u'Canada'}, {u'v': u'1577040'}]}],

u'schema': {u'fields': [{u'mode': u'NULLABLE',

u'name': u'country_name',

u'type': u'STRING'},

{u'mode': u'NULLABLE',

u'name': u'value',

u'type': u'INTEGER'}]},

u'totalRows': u'10'}

C:\BigQuery\PythonClient>

If you want to delve into BigQuery API, here is the link to start.